They had a trust and safety team to do this but dumbfuck laid them off.

Who knew, it turns out running a platform full of hate isn’t appealing to advertisers.

I also noticed Community Notes have become far more interested in nitpicking whatever vaguely leftist post they can find while letting blatant conspiracy theories and right-wing propaganda fly by unchecked.

most appeared to have moved from Accenture, a firm that provides content moderation contractors to internet companies

Bruh, Accenture is a consulting company, they provide everything contractors.

Gives you a sense of the thoroughness of the research in this article.

They interviewed someone, that someone mentioned Accenture, and they didn’t even google the company; they just put it straight into the article with no checking.

I mean, it’s not wrong, but that’s like saying that twitter is a platform that provides celebrity comments. True, but bruh.

Bruh, do you really think the author doesn’t know who one of the largest IT agencies in the world is? Could it be, rather, that they were dumbing it down for the audience, since it’s, you know, not an article about Accenture, and ended up with some slightly odd phrasing as a consequence?

Nice paywall :/

Also: bypass-paywalls-clean :) I didn’t even notice it was paywalled until I sent the link to a friend and she told me it was paywalled 😆

🤖 I’m a bot that provides automatic summaries for articles:

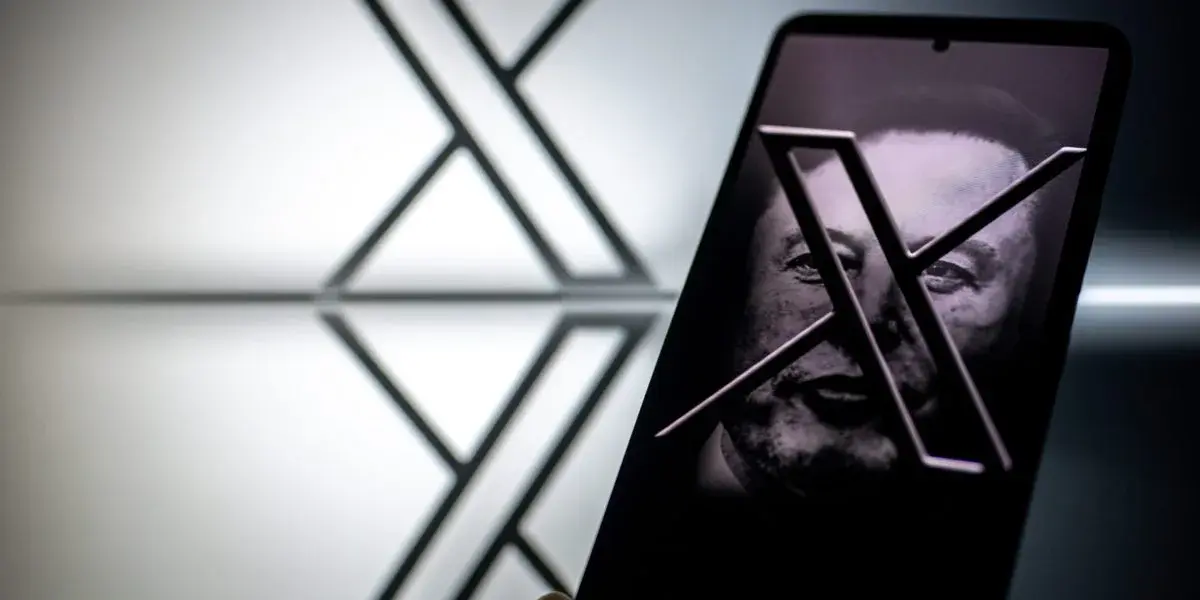

In July, Yaccarino announced to staff that three leaders would oversee various aspects of trust and safety, such as law enforcement operations and threat disruptions, Reuters reported.

According to LinkedIn, a dozen recruits have joined X as “trust and safety agents” in Austin over the last month—and most appeared to have moved from Accenture, a firm that provides content moderation contractors to internet companies.

“100 people in Austin would be one tiny node in what needs to be a global content moderation network,” former Twitter trust and safety council member Anne Collier told Fortune.

And Musk’s latest push into artificial intelligence technology through X.AI, a one-year-old startup that’s developed its own large language model, could provide a valuable resource for the team of human moderators.

“The site’s rules as published online seem to be a pretextual smokescreen to mask its owner ultimately calling the shots in whatever way he sees it,” the source familiar with X moderation added.

Julie Inman Grant, a former Twitter trust and safety council member who is now suing the company for lack of transparency over CSAM, is more blunt in her assessment: “You cannot just put your finger back in the dike to stem a tsunami of child sexual expose, or a flood of deepfake porn proliferating the platform,” she said.

Saved 88% of original text.

A for effort.

I keep hearing about toxicity on X, then not seeing it. I’m starting to doubt this premise is true.

I observed racism and toxicity on X. Reported a couple pretty bad posts (e.g. a user wishing for drowning of immigrants), but the platform decided they’re okay based on their own moderation rules.

Can’t decide if this response is Absence of Evidence or Black Swan fallacy. Either way, just because you haven’t experienced something doesn’t mean it’s false.

Haven’t been on it since Elon purchased the company. I do know that Twitter, by virtue of being powered by who you follow, can have a vastly different feel to different people.

If you start getting into political stuff, even in the before times, it could get pretty nasty. I can only imagine that’s worse now.

Try following the blue checkmark folk, and you’ll soon be flooded with toxicity. Alternatively… avoid the blue like the plague it is, and you’ll find very little toxicity.

X is “pay to troll”.

There’s plenty, but there’s toxicity to be found on every platform. I interpret this as people being mad that Trump was unbanned, or maybe some other ideological issue that mainstream Liberals have.

I think the bigger problem with twitter is that the bots have taken over and the content continues to circle the drain (but again, that happens on plenty of platforms).