lightrush

- 12 Posts

- 58 Comments

It’s terrible. With that said, this ASRock isn’t that offensive. Other than this thick plastic sheet, the rest looked fine. Very little offensive RGB.

While true for the component itself, there’s a material difference for any caps surrounding it. Sure, the chipset would work fine at 40, 50, 70°C. However, an electrolytic capacitor’s lifespan is halved with every 10°C temperature increase. From a brief search it seems solid caps also crap out much faster at higher temps but can outlast electrolytics at lower temps. This is a consideration for a long lifespan system. The one in my case is expected to operate till 2032 or beyond.
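As a rough illustration of the 10°C rule of thumb above, here is a minimal sketch. The rated figures (2,000 hours at 105°C) are a hypothetical example part, not taken from this board, and real capacitor life also depends on ripple current and voltage.

```python
def capacitor_life_hours(rated_hours: float, rated_temp_c: float, actual_temp_c: float) -> float:
    """Estimate lifespan with the 10°C rule of thumb.

    Life doubles for every 10°C below the rated temperature
    (equivalently, halves for every 10°C increase).
    """
    return rated_hours * 2 ** ((rated_temp_c - actual_temp_c) / 10)


# Hypothetical electrolytic cap: rated for 2,000 hours at 105°C.
RATED_HOURS = 2000
RATED_TEMP_C = 105
HOURS_PER_YEAR = 24 * 365

for temp_c in (40, 50, 60, 70):
    hours = capacitor_life_hours(RATED_HOURS, RATED_TEMP_C, temp_c)
    print(f"{temp_c}°C: ~{hours / HOURS_PER_YEAR:.1f} years of 24/7 operation")
```

Under this rule the gap between 40°C and 60°C is a factor of four in expected life, which is why it matters for a system meant to run for many years.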

I don’t think other components degrade in any significant fashion whether they run at 40 or 60°C.

Unfortunately I didn’t take before/after measurements but this thick plastic sheet cannot be good for the chipset thermals. 🥲

2·1 month ago

It is. I just wish it wasn’t this expensive. Will have to live with it for a while. 😅

1·1 month ago

So generally Pegatron. :D I used to buy GB because it was made in Taiwan when ASUS became Pegatron and went to China. Their quality decreased. GB used to put high quality components on their boards in comparison. But now GB is also made somewhere in the PRC. I’ve no idea where MSI are in terms of quality. We used to make fun of them using the worst capacitors back in the 90s/00s. Looking at their Newegg reviews, their 1-star ratings seem to be a lower proportion compared to Pegatron brands and GB. Maybe they’re nicer these days? The X570 replacement I got for this machine is an ASUS - “TUF” 🙄

2·1 month ago

They feel a bit like a mix between DSA and laptop keycaps.

4·2 months ago

What do you buy?

18·2 months ago

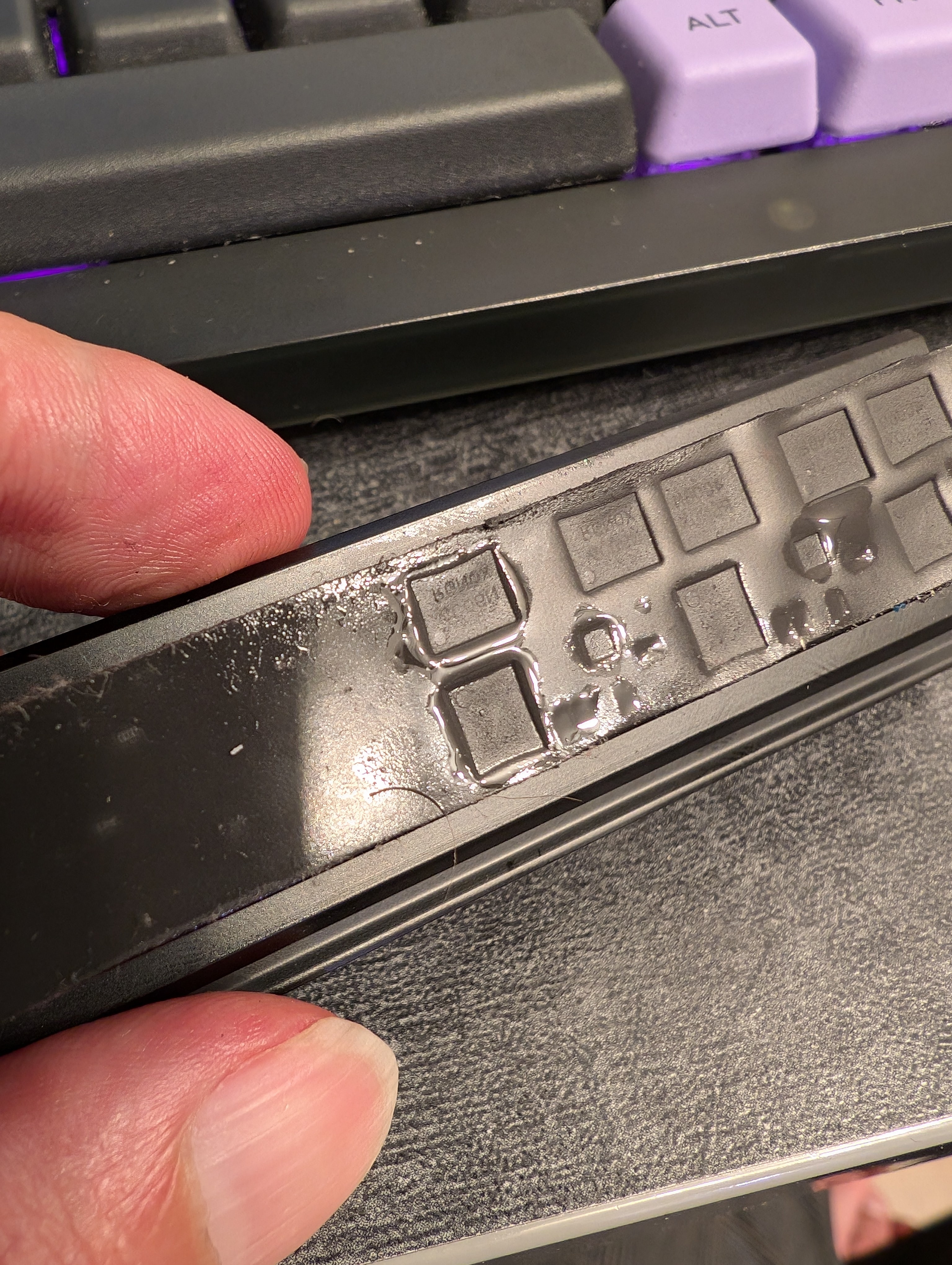

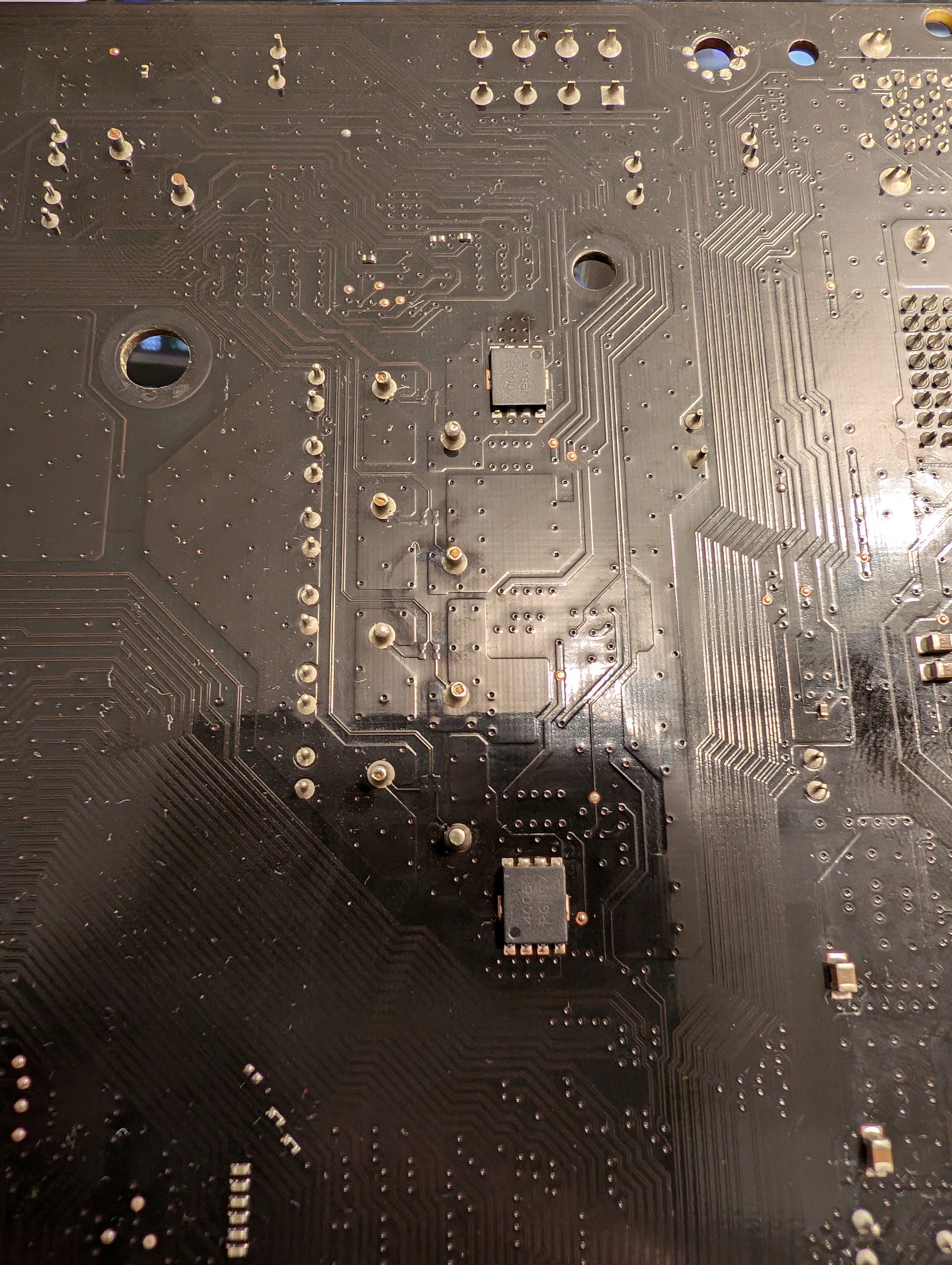

I think I found the source of the liquid @abcdqfr@lemmy.world. The thermal pad under the VRM heatsink has begun to liquefy into an oily substance. This substance appears to have seeped to the underside of the board through the vias around the VRM and discolored along the way.

Some rubbing with isopropyl alcohol and it’s almost gone:

Perhaps there’s still life left in this board if used with an older chip.

1·2 months ago

I think the board has reached the end of the road. 😅

231·2 months ago

Hard to say. She’s been in 24/7 service since 2017. Never had stability issues and I’ve tested it with Prime95 plenty of times upon upgrades. Last week I ran a Llama model and the computer froze hard. Even holding the power button wouldn’t turn it off. Did the PSU power flip, came back up. Prime95 stable. Llama -> rip. Perhaps it’s been cooked for a while and only this workload trips it. She’s an old board, a Gigabyte with B350 running a 5950X (for a couple of years), so it’s not super surprising that the power section has been a bit overused. 😅 Replacing with an X570 as we speak.

14·2 months ago

Funny enough, I can’t detect the smell from hell. Could be COVID.

1·2 months ago

Iiinteresting. I’m on the larger AB350-Gaming 3 and it’s got REV: 1.0 printed on it. No problems with the 5950X so far. 🤐 Either sheer luck or there could have been updated units before they officially changed the rev marking.

1·2 months ago

On paper it should support it. I’m assuming it’s the ASRock AB350M. With a certain BIOS version of course. What’s wrong with it?

1·2 months ago

> B350 isn’t a very fast chipset to begin with

For sure.

> I’m willing to bet the CPU in such a motherboard isn’t exactly current-gen either.

Reasonable bet, but it’s a Ryzen 9 5950X with 64GB of RAM. I’m pretty proud of how far I’ve managed to stretch this board. 😆 At this point I’m waiting for blown caps, but the case temp is pretty low so it may end up trucking along for a surprisingly long time.

> Are you sure you’re even running at PCIe 3.0 speeds too?

So given the CPU, it should be PCIe 3.0, but that doesn’t remove any of the queues/scheduling suspicions for the chipset.

I’m now replicating data out of this pool and the read load looks perfectly balanced. Bandwidth’s fine too. I think I have no choice but to benchmark the disks individually outside of ZFS once I’m done with this operation in order to figure out whether any show problems. If not, they’ll go in the spares bin.

4·2 months ago

I put the low IOPS disk in a good USB 3 enclosure, hooked to an on-CPU USB controller. Now things are flipped:

                                  capacity     operations     bandwidth
    pool                        alloc   free   read  write   read  write
    --------------------------  -----  -----  -----  -----  -----  -----
    storage-volume-backup       12.6T  3.74T      0    563      0   293M
      mirror-0                  12.6T  3.74T      0    563      0   293M
        wwn-0x5000c500e8736faf      -      -      0    406      0   146M
        wwn-0x5000c500e8737337      -      -      0    156      0   146M

You might be right about the link problem.

Looking at the B350 diagram, the whole chipset is hooked via a PCIe 3.0 x4 link to the CPU. The other pool (the source) is hooked via a USB controller on the chipset. The SATA controller is also on the chipset, so it also shares the chipset-CPU link. I’m pretty sure I’m also using all the PCIe links the chipset provides for SSDs. So that’s roughly 4GB/s total for the whole chipset. Now I’m probably not saturating the whole link in this particular workload, but perhaps there might be another related bottleneck.
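For reference, a quick back-of-the-envelope sketch of where the ~4GB/s figure comes from. It only accounts for the PCIe 3.0 line rate and 128b/130b encoding; protocol overhead is ignored, and treating the 293M pool write figure as MB/s is an approximation.

```python
def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s (encoding overhead only)."""
    transfers_per_second = 8e9      # PCIe 3.0 runs at 8 GT/s per lane
    encoding_efficiency = 128 / 130  # 128b/130b line encoding
    bits_per_byte = 8
    return lanes * transfers_per_second * encoding_efficiency / bits_per_byte / 1e9


chipset_link = pcie3_bandwidth_gb_s(4)  # chipset-to-CPU uplink is x4
pool_write_gb_s = 293 / 1000            # pool write bandwidth quoted above, approximated as MB/s

print(f"PCIe 3.0 x4 uplink: ~{chipset_link:.2f} GB/s")
print(f"Pool writes use ~{pool_write_gb_s / chipset_link:.0%} of that")
```

Even counting the source pool’s reads going over the same uplink, this workload sits far below the link’s ceiling, which fits the guess that raw link bandwidth is not the limit here.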

2·2 months ago

Turns out the on-CPU SATA controller isn’t available when the NVMe slot is used. 🫢 Swapped SATA ports, no diff. Put the low IOPS disk in a good USB 3 enclosure, hooked to an on-CPU USB controller. Now things are flipped:

                                  capacity     operations     bandwidth
    pool                        alloc   free   read  write   read  write
    --------------------------  -----  -----  -----  -----  -----  -----
    storage-volume-backup       12.6T  3.74T      0    563      0   293M
      mirror-0                  12.6T  3.74T      0    563      0   293M
        wwn-0x5000c500e8736faf      -      -      0    406      0   146M
        wwn-0x5000c500e8737337      -      -      0    156      0   146M

3·2 months ago

Interesting. SMART looks pristine on both drives. Brand new drives - Exos X22. Doesn’t mean there isn’t an impending problem of course. I might try shuffling the links to see if that changes the behaviour, as the other comment suggested. Both are currently hooked to an AMD B350 chipset SATA controller. There are two ports that should be hooked to the on-CPU SATA controller. I imagine the two SATA controllers don’t share bandwidth. I’ll try putting one disk on the on-CPU controller.

2·7 months ago

Yes, yes I would use ZFS if I had only one file on my disk.

2·7 months ago

OK, I think it may have to do with the odd number of data drives. If I create a raidz2 with 4 of the 5 disks, even with ashift=12,recordsize=128K, the performance in sequential single thread read is stellar. What’s not clear is why this doesn’t affect, or not as much, the 4x 8TB-drive raidz1.
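To make the "odd number of data drives" arithmetic concrete, here is a small sketch. It only checks whether a 128K record splits evenly into 4K sectors across the data disks; it does not model the ZFS allocator, and the 5-disk layout being raidz2 is an assumption on my part.

```python
def record_split(recordsize: int, ashift: int, width: int, parity: int) -> tuple[int, int]:
    """Return (full sectors per data disk, leftover sectors) for one record."""
    sector_size = 1 << ashift           # ashift=12 means 4096-byte sectors
    sectors = recordsize // sector_size
    data_disks = width - parity         # disks holding data rather than parity
    return divmod(sectors, data_disks)


RECORDSIZE = 128 * 1024
ASHIFT = 12

layouts = [
    ("5-disk raidz2 (assumed original layout)", 5, 2),
    ("4-disk raidz2", 4, 2),
    ("4-disk raidz1", 4, 1),
]

for name, width, parity in layouts:
    per_disk, leftover = record_split(RECORDSIZE, ASHIFT, width, parity)
    print(f"{name}: {width - parity} data disks, {per_disk} sectors each, {leftover} left over")
```

Only the 4-disk raidz2 divides evenly. The 4-disk raidz1 has the same uneven split as the assumed 5-disk raidz2, so the even/uneven split alone doesn’t explain why the raidz1 isn’t hit as hard.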

100%. At least Timemore made this scale trivial to open and the cell is not difficult to replace.