How are you restricting internet access for it?

Maybe waiting to see which side comes out on top. Kinda like Volkswagen. (Yes I know it didn’t exactly happen like that)

I worked in the object recognition and computer vision industry for almost a decade. That stuff works. Really well, actually.

But this checkout thing from Amazon always struck me as odd. It’s the same issue as these “take a photo of your fridge and the system will tell you what you can cook” apps. It doesn’t work well because items can be hidden in the back.

The biggest challenge in computer vision is occlusion, followed by resolution (in the context of surveillance cameras, you’re lucky to get 200x200 for smaller objects). They would have had a really hard, if not impossible, time getting clear shots of everything.

My gut instinct tells me that they had intended to build a huge training set over time using this real-world setup and hope that the sheer amount of training data could help overcome at least some of the issues with occlusion.

That’s not AI tho.

What do you mean?

Earth itself is moving around the sun at about 100,000 km/h and the sun is traveling through the galaxy at about 1 million km/h.

So if Marty went back/forward just one hour then he’d be about 1,100,000 kilometers away from Earth in space (or 900,000 kilometers, depending on the orbital direction of Earth relative to the sun’s direction of travel).

And then there’s the motion and speed of the Milky Way itself.

This is all assuming that the layout of the underlying fabric of spacetime is absolute (which it seems to be, outside of expansion).
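
The one-hour arithmetic above can be sketched like this. It uses the same rounded speeds from the comment and assumes the two motions are either perfectly aligned or perfectly opposed, which is a simplification. The constant and function names are just made up for the illustration.

```python
# Rounded speeds from the comment above, in km/h (not precise measurements).
EARTH_ORBITAL_SPEED_KMH = 100_000
SUN_GALACTIC_SPEED_KMH = 1_000_000


def displacement_range_km(hours: float) -> tuple[float, float]:
    """Return (minimum, maximum) distance in km between the arrival point
    and Earth's actual position after jumping `hours` through time.

    Assumption: the two velocities are treated as simple 1-D speeds that
    either point the same way (maximum) or opposite ways (minimum).
    """
    elapsed = abs(hours)  # going back or forward gives the same distance
    opposed = abs(SUN_GALACTIC_SPEED_KMH - EARTH_ORBITAL_SPEED_KMH) * elapsed
    aligned = (SUN_GALACTIC_SPEED_KMH + EARTH_ORBITAL_SPEED_KMH) * elapsed
    return opposed, aligned


low, high = displacement_range_km(1)
print(f"{low:,.0f} km to {high:,.0f} km")  # 900,000 km to 1,100,000 km
```

In reality the angle between the two velocities changes over the year, so the actual figure would fall somewhere between those two bounds.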

I’ll bring the snacks!

What ads?

Have you actually used Windows?

I’ll help also

Thanks for that read. I definitely agree with the author for the most part. I don’t really agree that current LLMs are a form of AGI, but it’s definitely close.

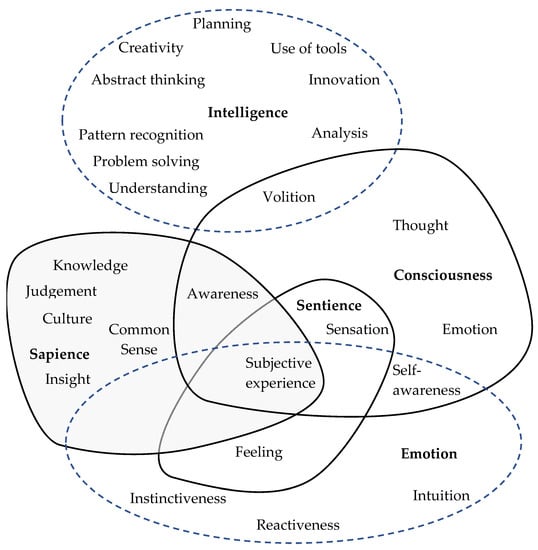

But what isn’t up for debate is the fact that LLMs are 100% AI. There’s no debate there. But I think the reason why people argue that is because they conflate “intelligence” with concepts like sapience, sentience, consciousness, etc.

These people don’t understand that intelligence is a concept that can, and does, exist outside of consciousness.

The most infuriating thing for me is the constant barrage of “LLMs aren’t AI” from people.

These people have no understanding of what they’re talking about.

Edit: to everyone downvoting me, look at this image

And is the photo/video generator completely on home machines without any processing being done remotely already?

Yes

Did you really just try to excuse and downplay a company claiming full ownership and rights over all users’ data?

As soon as anyone can do this on their own machine with no third parties involved

We’ve been there for a while now

Facial recognition (FR) is not generative AI, and people need to stop crying about FR being the boogeyman. The harm that FR can potentially cause has been covered and surpassed by other forms of monitoring, primarily smartphone and online tracking.

But I don’t see how you can make the customer go for a ride if the customer doesn’t want to go for a ride.

Don’t hand over the keys on the basis that company requirements for liability mitigation were not met.

I know that sounds like a stretch, but Tesla buyers don’t own their cars. Tesla has control over the system (OTA updates), you “have to” bring it to Tesla for repairs and service, and they’ve even tried to control who can resell a Cybertruck.

You’re basically renting a Tesla at full price.

Most of that is in the kernel anyways.

they literally have no mechanism to do any of those things.

What mechanism does it have for pattern recognition?

that is literally how it works on a coding level.

Neural networks aren’t “coded”.

It’s called an LLM for a reason.

That doesn’t mean what you think it does. Another word for language is communication. So you could just as easily call it a Large Communication Model.

Neural networks have hundreds of thousands (at the minimum) of interconnected neurons. Llama-2 has 70 billion parameters. The newly released Grok has over 300 billion. And though we don’t have official numbers, GPT-4 is said to be close to a trillion.

The interesting thing is that when you have neural networks of such a size and you feed large amounts of data into them, emergent properties start to show up. More than just “predicting the next word”, the network starts to develop a relational understanding of certain words that you wouldn’t expect. It’s been shown that LLMs understand things like the fact that Miami and Houston are closer together than New York and Paris.

Those kinds of things aren’t programmed, they are emergent from the dataset.

As for things like creativity, they are absolutely creative. I have asked seemingly impossible questions (like a Harlequin story about the Terminator and Rambo) and the stuff it came up with was actually astounding.

They regularly use tools. LangChain is a thing. There’s a new LLM-based agent called Devin that can program, look up docs online, and use a command line terminal. That’s using a tool.

That also ties in with problem solving. Problem solving is actually one of the benchmarks that researchers use to evaluate LLMs. So they do problem solving.

To problem solve requires the ability to do analysis. So that check mark is ticked off too.

Just about anything that’s a neural network can be called an AI, because the total is usually greater than the sum of its parts.

Edit: I wrote interconnected layers when I meant neurons

LLMs as AI is just a marketing term. there’s nothing “intelligent” about “AI”

Yes there is. You just mean it doesn’t have “high” intelligence. Or maybe you mean to say that there’s nothing sentient or sapient about LLMs.

Some aspects of intelligence are:

LLMs definitely hit basically all of these points.

Most people have been told that LLMs “simply” provide a result by predicting the word that’s most likely to come next, but this is a completely reductionist explanation and isn’t the whole picture.

Edit: yes I did leave out things like “understanding”, “abstract thinking”, and “innovation”.

It has the classic 3 section style. Intro, response, conclusion.

It starts by acknowledging the situation. Then it moves on to the suggestion/response. Then finally it gives a short conclusion.